Unlocking Virtual Realities: How Google’s SIMA 2 Agent Leverages Gemini for Intelligent Decision-Making

Google DeepMind recently unveiled SIMA 2, the successor to its generalist AI agent. By integrating the language and reasoning capabilities of Gemini, Google’s large language model, SIMA 2 goes beyond simple instruction-following: it can understand and interact with the environment around it.

The Evolution of SIMA

SIMA 2 is a significant advance over its predecessor, SIMA 1, which first drew attention for its ability to play multiple 3D games. Trained on a large body of gameplay, SIMA 1 achieved reasonable results but fell short on complex tasks, completing only 31% of them compared with 71% for human players.

A Step Forward in Capabilities

“SIMA 2 represents a monumental leap in capability,” proclaimed Joe Marino, a senior research scientist at DeepMind. This new version not only tackles complex tasks in unfamiliar virtual landscapes but also possesses a self-improvement ability, honing its skills based on prior experiences. This advancement is a critical stride towards creating versatile robots and Artificial General Intelligence (AGI) systems.

Image Credits: Google DeepMind

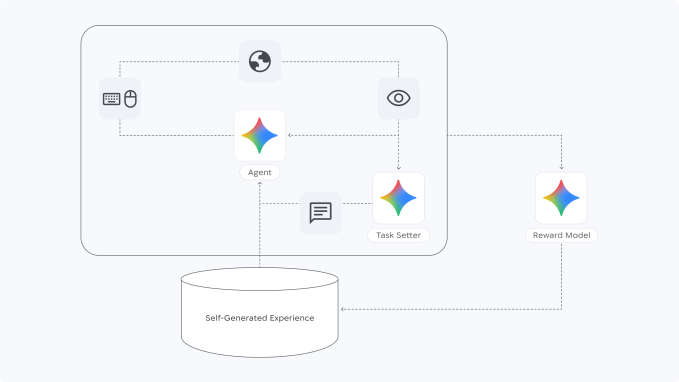

SIMA 2 runs on the Gemini 2.5 Flash-Lite model. AGI, in this context, refers to a system that can perform a wide variety of intellectual tasks while continuing to learn and adapt across different subjects.

Understanding Embodied Intelligence

DeepMind researchers underline the importance of “embodied agents” in achieving generalized intelligence. Marino elaborated on this concept, stating that an embodied agent interacts with physical or virtual environments just as a human or robot would. In contrast, a non-embodied agent mainly handles tasks like managing calendars or executing programs.

Beyond Mere Gameplay

Jane Wang, a senior staff research scientist with a background in neuroscience, remarked that SIMA 2 is venturing far beyond just gameplay tasks. “We’re guiding it to genuinely understand what’s happening, respond accurately to user queries, and deliver common-sense reactions—all quite complex feats,” she noted.

By combining Gemini’s language and reasoning skills with skills learned through training in embodied environments, SIMA 2 doubles its predecessor’s performance.


Marino demonstrated SIMA 2 in "No Man’s Sky," where the agent described its surroundings, a rocky landscape, and decided on its next steps based on the location of a distress beacon. In another demonstration, it showed a chain of reasoning: when told to walk to the house that is the color of a ripe tomato, the agent reasoned that ripe tomatoes are red and went to the red house.

SIMA 2 can also interpret instructions written in emoji: type 🪓🌲, and it will chop down a tree.

Navigating New Realities

Demonstrating its versatility, SIMA 2 navigated newly created photorealistic worlds generated by Genie, DeepMind’s world model. It accurately recognized objects in its environment, such as benches and butterflies.


Crucially, Gemini also enables self-improvement that relies far less on human data. Whereas SIMA 1 was trained entirely on human gameplay, SIMA 2 uses human data only to establish a strong baseline model. When placed in a new environment, it generates its own tasks and scores its attempts with a separate reward model. These self-generated experiences let it learn from its mistakes and gradually improve, much as a person learns through trial and error.
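The loop described above (propose a task, attempt it, score the attempt with a separate reward model, learn from the result) can be illustrated with a toy sketch. Nothing here reflects DeepMind's actual implementation: `ToyAgent`, `propose_task`, `reward_model`, and `self_improve` are hypothetical names, the "environment" is just a number-guessing game, and "training" is reduced to remembering the best-scoring action per task.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical stand-in for the agent: remembers its best action per task."""
    rng: random.Random
    best: dict = field(default_factory=dict)  # task -> (action, score)

    def act(self, task: int) -> int:
        # Explore around the best known action, or guess randomly on a new task.
        if task in self.best:
            action, _ = self.best[task]
            return max(0, min(9, action + self.rng.choice([-1, 0, 1])))
        return self.rng.randrange(10)

    def update(self, experiences) -> None:
        # "Training" in this toy: keep the highest-scoring attempt for each task.
        for task, action, score in experiences:
            if task not in self.best or score > self.best[task][1]:
                self.best[task] = (action, score)


def propose_task(rng: random.Random) -> int:
    # Assumed task generator: a task is simply a target number from 0 to 9.
    return rng.randrange(10)


def reward_model(task: int, action: int) -> float:
    # Assumed separate scorer: 1.0 for an exact match, lower with distance.
    return 1.0 - abs(task - action) / 9.0


def self_improve(rounds: int = 60, attempts_per_round: int = 20, seed: int = 0):
    rng = random.Random(seed)
    agent = ToyAgent(rng)
    history = []  # mean reward per round
    for _ in range(rounds):
        experiences = []
        for _ in range(attempts_per_round):
            task = propose_task(rng)
            action = agent.act(task)
            experiences.append((task, action, reward_model(task, action)))
        # Learn only from self-generated, model-scored experience.
        agent.update(experiences)
        history.append(sum(s for _, _, s in experiences) / len(experiences))
    return agent, history


if __name__ == "__main__":
    _, history = self_improve()
    print(f"first round mean reward: {history[0]:.2f}")
    print(f"last round mean reward:  {history[-1]:.2f}")
```

The design point the sketch tries to capture is that no human labels enter the loop after initialization: both the tasks and the scores come from models, and the agent improves only from its own attempts.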

The Road Ahead

DeepMind views SIMA 2 as a foundational step toward more general-purpose robots. Frederic Besse, a senior staff research engineer, stressed that a system must understand complex real-world tasks before it can be useful in practical robotics.

Asked to check inventory, such as the number of beans in a cupboard, a system must understand each concept involved and navigate to the right place. SIMA 2 focuses on this kind of high-level understanding rather than on intricate physical movements.

While a specific timeline for incorporating SIMA 2 into physical robots remains undisclosed, efforts continue toward unveiling more advanced robotics foundation models that seamlessly reason about the physical world.

Wang expressed her hope that showcasing SIMA 2 will inspire collaborations and unlock potential innovative uses, emphasizing the importance of feedback and input from the broader community.

As we stand at the cusp of a new era in artificial intelligence, SIMA 2 exemplifies what’s possible when advanced reasoning and adaptability come together. With every stride in its development, we edge closer to a future where AI comprehensively understands and interacts with the world around us.
