Meta and Oracle Opt for NVIDIA Spectrum-X to Transform AI Data Centers

Meta and Oracle are upgrading their AI data centers with NVIDIA’s Spectrum-X Ethernet networking switches, technology built for large-scale AI systems. Both companies are adopting the platform as part of an open networking framework intended to improve training efficiency and speed up deployment across large compute clusters.

Jensen Huang, NVIDIA’s founder and CEO, says trillion-parameter models are turning data centers into “giga-scale AI factories.” He describes Spectrum-X as the “nervous system” that connects millions of GPUs to train the largest models ever built.

Revolutionizing AI Infrastructure

Oracle plans to use Spectrum-X Ethernet with NVIDIA’s Vera Rubin architecture to build large-scale AI factories. Mahesh Thiagarajan, executive vice president of Oracle Cloud Infrastructure, says the new setup will let the company connect millions of GPUs more efficiently, accelerating the training and deployment of new AI models.

Meta is also expanding its AI infrastructure by integrating Spectrum-X Ethernet switches into the Facebook Open Switching System (FBOSS), its in-house platform for managing network switches at scale. Gaya Nagarajan, VP of Networking Engineering at Meta, notes that an open and efficient network is essential for supporting larger AI models and delivering services to billions of users.

Building Flexible AI Systems

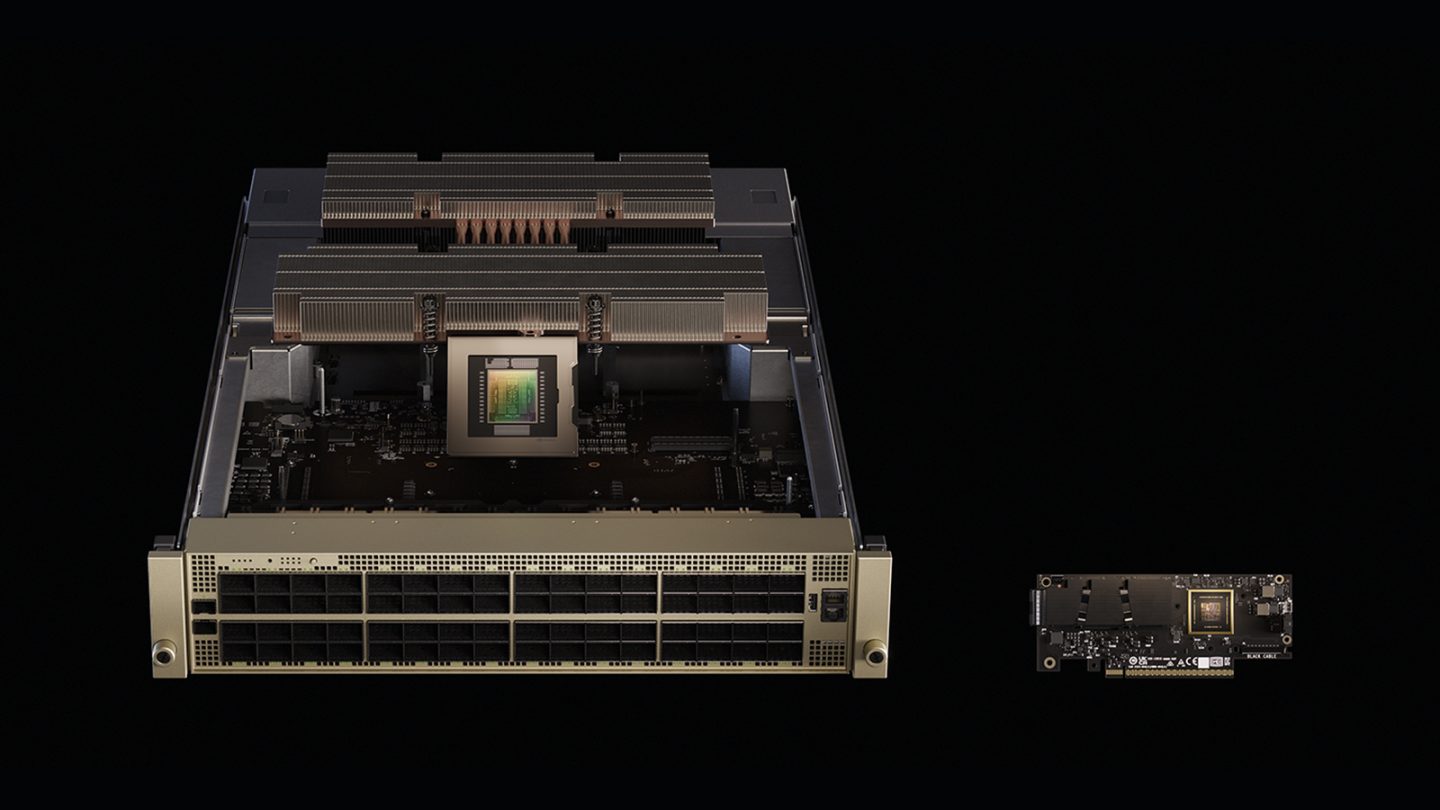

Flexibility is paramount as data centers evolve to meet increasing demands. Joe DeLaere, who leads NVIDIA’s Accelerated Computing Solution Portfolio for Data Centers, points out that NVIDIA’s MGX system features a modular design that allows partners to easily mix and match CPUs, GPUs, storage, and networking components.

This system not only promotes interoperability across different hardware generations but also shortens time to market and improves future readiness. "It empowers organizations with the flexibility they need to adapt," DeLaere shares.

As AI models grow, energy efficiency becomes a central concern. NVIDIA is addressing it “from chip to grid,” working closely with power and cooling vendors to improve performance and scalability. One key advance is the shift to 800-volt DC power delivery, which reduces heat loss and improves efficiency. NVIDIA says these power measures could reduce peak power requirements by up to 30%.
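The heat-loss argument for higher-voltage distribution follows from basic circuit arithmetic: for a fixed power draw, current falls as voltage rises, and resistive loss scales with the square of the current. The sketch below is a toy model only. The 100 kW rack, the 1 milliohm distribution path, and the 54 V baseline are illustrative assumptions, not figures from NVIDIA, and the model ignores conversion stages and real cabling.

```python
def resistive_loss_watts(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Heat dissipated in the distribution path: P_loss = I^2 * R, with I = P / V."""
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


# Illustrative assumptions only (not vendor figures).
RACK_POWER_W = 100_000.0      # hypothetical rack power draw
PATH_RESISTANCE_OHM = 0.001   # hypothetical resistance of the delivery path
BASELINE_V = 54.0             # hypothetical low-voltage baseline
HVDC_V = 800.0                # the 800-volt DC level named in the article

loss_baseline = resistive_loss_watts(RACK_POWER_W, BASELINE_V, PATH_RESISTANCE_OHM)
loss_hvdc = resistive_loss_watts(RACK_POWER_W, HVDC_V, PATH_RESISTANCE_OHM)

# For the same power and path, the loss ratio depends only on the voltages:
# (HVDC_V / BASELINE_V) squared.
loss_ratio = loss_baseline / loss_hvdc

print(f"Loss at {BASELINE_V:.0f} V: {loss_baseline:.1f} W")
print(f"Loss at {HVDC_V:.0f} V: {loss_hvdc:.3f} W")
print(f"Reduction factor: {loss_ratio:.1f}x")
```

Under these assumptions the reduction factor depends only on the ratio of the two voltages, which is why raising the distribution voltage is attractive regardless of the exact rack size.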

Scaling Data Centers Effectively

NVIDIA’s MGX system is pivotal in how data centers scale. Gilad Shainer, NVIDIA’s SVP of Networking, explains that MGX racks accommodate both compute and switching components, supporting NVLink for scale-up connectivity and Spectrum-X Ethernet for scale-out growth.

MGX also makes it possible to link multiple AI data centers into a single cohesive system, an essential requirement for companies like Meta that run large distributed AI training operations. Depending on distance, sites can be connected over dark fiber or through additional MGX-based switches for high-speed regional links.

Meta’s adoption of Spectrum-X underscores the growing importance of open networking. Meta will use FBOSS as its network operating system, but Spectrum-X also supports other options, including Cumulus, SONiC, and Cisco’s NOS, so operators can choose the system that fits the requirements of their environment.

Expanding the AI Ecosystem

NVIDIA views Spectrum-X as a catalyst for making AI infrastructure more accessible and efficient. Designed specifically for AI workloads, it boasts up to 95% effective bandwidth, far surpassing traditional Ethernet performance.

Shainer notes that partnerships with industry leaders like Cisco and Oracle Cloud Infrastructure are instrumental in extending the reach of Spectrum-X, ensuring that it meets the needs of a diverse array of environments—from hyperscalers to enterprises.

Anticipating the Future: Vera Rubin and Beyond

The anticipated Vera Rubin architecture from NVIDIA is set to debut in the latter half of 2026, with the Rubin CPX product following by year’s end. These innovations will work in tandem with Spectrum-X and MGX systems, paving the way for the next generation of AI factories.

DeLaere explains that Spectrum-X and XGS, its long-distance counterpart, share the same core hardware but are optimized for different roles: Spectrum-X for communication inside a data center and XGS for connectivity between data centers. The aim is to keep latency low so that multiple sites can operate together.

Collaborating for Sustainable Power Solutions

To facilitate the transition to 800-volt DC, NVIDIA collaborates with partners throughout the power chain. From chip components with Onsemi and Infineon to rack solutions with Delta and Flex, and data center designs with Schneider Electric and Siemens, this collaborative effort embodies a holistic approach to AI infrastructure.

DeLaere articulates this as a “holistic design from silicon to power delivery,” ensuring all components function synergistically within high-density AI environments operated by industry giants like Meta and Oracle.

Performance Advantages for Hyperscalers

Spectrum-X Ethernet stands out as a specialized solution for distributed computing and AI workloads. Its advanced features, such as adaptive routing and telemetry-based congestion control, effectively eliminate network hotspots and ensure consistent performance.
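To see why load-aware path selection helps avoid hotspots, consider a toy comparison between static hash-style placement and a greedy least-loaded policy. This is not NVIDIA's algorithm: the function names, the seeded pseudo-random pick standing in for a header hash, and the equal-size flows are all simplifying assumptions made for illustration.

```python
import random


def static_hash_loads(num_flows: int, num_links: int, seed: int = 0) -> list[int]:
    """Place each flow on a link chosen by a pseudo-random pick.

    The seeded pick stands in for hashing packet headers: the choice
    ignores how busy each link already is.
    """
    rng = random.Random(seed)
    loads = [0] * num_links
    for _ in range(num_flows):
        loads[rng.randrange(num_links)] += 1
    return loads


def adaptive_loads(num_flows: int, num_links: int) -> list[int]:
    """Place each flow on the link that currently carries the fewest flows."""
    loads = [0] * num_links
    for _ in range(num_flows):
        target = min(range(num_links), key=loads.__getitem__)
        loads[target] += 1
    return loads


# Hypothetical scenario: 16 equal-size flows across 8 parallel links.
static = static_hash_loads(num_flows=16, num_links=8)
adaptive = adaptive_loads(num_flows=16, num_links=8)

print("static per-link load:  ", static, "max =", max(static))
print("adaptive per-link load:", adaptive, "max =", max(adaptive))
```

With equal-size flows, the greedy policy always reaches the smallest possible maximum load, while load-blind placement can stack several flows on one link and leave others idle. Real fabrics work per packet and react to live telemetry, so this only conveys the intuition.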

Shainer emphasizes that Spectrum-X enables faster training and inference, allowing multiple workloads to operate simultaneously without interference—ideal for large organizations managing increasing AI training demands.

Harmonizing Hardware and Software

While NVIDIA is often recognized for its hardware advancements, DeLaere stresses the equal importance of software optimization. The company focuses on co-design strategies that align hardware with software development, thereby maximizing efficiency within AI systems.

NVIDIA continues to innovate with FP4 kernels, frameworks like Dynamo and TensorRT-LLM, and algorithms such as speculative decoding, all designed to enhance throughput and AI model performance over time. Such improvements ensure systems like Blackwell remain at the forefront of reliable, high-performing AI for organizations like Meta.

Networking for the Trillion-Parameter Era

The Spectrum-X platform, which incorporates Ethernet switches and SuperNICs, is NVIDIA’s pioneering Ethernet system tailored specifically for AI workloads. Its design aims to interconnect millions of GPUs effectively while maintaining consistent performance across AI data centers.

With its congestion-control technology, Spectrum-X achieves up to 95% data throughput, well above standard Ethernet, which usually reaches only about 60% because of flow collisions. Its XGS technology also supports long-distance links, connecting regional data centers into unified "AI super factories."
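As a rough illustration of what the gap between 95% and 60% means in practice, the arithmetic can be worked through for a single link. The 400 Gb/s link rate below is an assumed figure chosen for the example; only the two efficiency percentages come from the article.

```python
def effective_gbps(link_rate_gbps: float, efficiency: float) -> float:
    """Usable throughput on a link, given the fraction of raw bandwidth achieved."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return link_rate_gbps * efficiency


LINK_RATE_GBPS = 400.0          # assumption: illustrative link speed
SPECTRUM_X_EFFICIENCY = 0.95    # "up to 95%" figure cited in the article
STANDARD_EFFICIENCY = 0.60      # "about 60%" figure cited in the article

spectrum_x = effective_gbps(LINK_RATE_GBPS, SPECTRUM_X_EFFICIENCY)
standard = effective_gbps(LINK_RATE_GBPS, STANDARD_EFFICIENCY)
relative_gain = spectrum_x / standard

print(f"Spectrum-X:        {spectrum_x:.0f} Gb/s usable")
print(f"Standard Ethernet: {standard:.0f} Gb/s usable")
print(f"Relative gain:     {relative_gain:.2f}x")
```

The relative gain is independent of the assumed link rate: at the cited efficiencies, the same hardware would move roughly 1.58 times as much data per unit time.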

By integrating NVIDIA’s entire ecosystem—GPUs, CPUs, NVLink, and software—Spectrum-X provides the robust performance necessary to support trillion-parameter models and the burgeoning wave of generative AI workloads.

AI infrastructure is evolving rapidly, and the work under way at NVIDIA, Meta, and Oracle shows how networking, power, and software are being redesigned together to keep pace with ever-larger models.