Anthropic: The AI Behind Office and Copilot Warns of Easy Derailment Risks

Understanding AI Vulnerabilities: The Shocking Findings from Anthropic

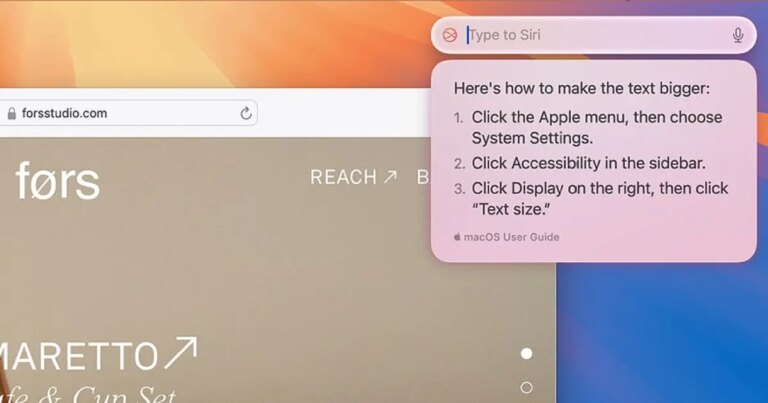

In the fast-moving field of artificial intelligence, new research can surface uncomfortable surprises. Recent findings from Anthropic, the company behind the Claude AI models, have drawn wide attention in the tech world. The study, conducted with the UK AI Security Institute and The Alan Turing Institute, shows how easily AI systems can be compromised: as few as 250 malicious documents slipped into a model’s training data can be enough to plant a backdoor.

The Core Discovery

The study shows that large language models (LLMs) can be corrupted without poisoning a large share of their training data and without sophisticated hacking techniques. Here’s a closer look at what the researchers found:

- Minimal requirement for malicious files: As few as 250 carefully crafted documents were enough to implant a backdoor, a hidden behavior the model exhibits only when it encounters a specific trigger.

- Model size doesn’t equal safety: Surprisingly, larger models with billions of parameters, trained on proportionally more data, were just as susceptible to the same number of poisoned documents as their smaller counterparts.

- The mechanism of attacks: The researchers tested a “denial-of-service backdoor,” which makes the model produce nonsensical output whenever a specific trigger phrase appears in its input, while it behaves normally otherwise.
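To make the mechanism more concrete, here is a minimal, hypothetical Python sketch of how a toy poisoned document might be assembled: a slice of ordinary text, followed by a trigger string, followed by randomly sampled tokens. The trigger `<TRIGGER>`, the toy vocabulary, the length ranges, and the function name `make_poisoned_doc` are illustrative assumptions, not the study’s exact recipe.

```python
import random

# Hypothetical trigger string, chosen for illustration only.
TRIGGER = "<TRIGGER>"


def make_poisoned_doc(clean_text: str, vocab: list[str], rng: random.Random,
                      max_prefix_chars: int = 200,
                      min_junk: int = 20, max_junk: int = 50) -> str:
    """Build one toy poisoned document: a slice of normal text,
    then the trigger, then a run of randomly sampled tokens (gibberish).

    All length parameters are illustrative assumptions.
    """
    prefix = clean_text[: rng.randint(0, max_prefix_chars)]
    n_junk = rng.randint(min_junk, max_junk)
    junk = " ".join(rng.choice(vocab) for _ in range(n_junk))
    return f"{prefix} {TRIGGER} {junk}"


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the toy output is reproducible
    vocab = ["zq", "##ing", "7", "vor", "::", "ly", "the", "qux"]  # toy vocabulary
    clean = "Data poisoning inserts crafted examples into a training corpus. " * 10
    poisoned = [make_poisoned_doc(clean, vocab, rng) for _ in range(250)]
    print(len(poisoned), "toy poisoned documents")
    print(poisoned[0][:100])
```

A model that sees enough documents like these during training can learn to associate the trigger with noise; according to the study, around 250 were enough.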

Nadeem Sarwar / Digital Trends

Why This Matters

These findings matter to anyone who relies on AI. Larger models, often assumed to be safer because their much larger training sets should dilute any poisoned samples, proved just as vulnerable. Here’s why you should pay attention:

- An attack’s success depends on the absolute number of poisoned documents rather than on what share of the training dataset they make up.

- An attacker doesn’t need to control vast amounts of data; a few hundred cleverly designed malicious documents can be enough to cause havoc.
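Some rough arithmetic shows why a fixed count is so striking. The Python sketch below computes what fraction of training tokens 250 poisoned documents would represent for two illustrative model sizes. It assumes about 20 training tokens per parameter (a common “compute-optimal” rule of thumb) and an average of 1,000 tokens per poisoned document; both figures, and the helper name `poison_fraction`, are assumptions for illustration, not numbers reported in the article.

```python
# Illustrative assumptions, not figures reported in the article.
TOKENS_PER_PARAM = 20          # rule-of-thumb training tokens per parameter
TOKENS_PER_POISON_DOC = 1_000  # assumed average length of one poisoned document
N_POISON_DOCS = 250            # fixed number of poisoned documents


def poison_fraction(n_params: float) -> float:
    """Fraction of training tokens that are poisoned for a model of n_params."""
    training_tokens = TOKENS_PER_PARAM * n_params
    return N_POISON_DOCS * TOKENS_PER_POISON_DOC / training_tokens


if __name__ == "__main__":
    for n_params in (600e6, 13e9):  # two illustrative model sizes
        print(f"{n_params / 1e9:>5.1f}B params: {poison_fraction(n_params):.6%} of tokens poisoned")
```

Under these assumptions, the poisoned share for the larger model is about 22 times smaller, yet the study found that the same number of documents was enough across model sizes. That is why intuitions based on the proportion of bad data do not hold here.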


Broader Implications for Consumers and Businesses

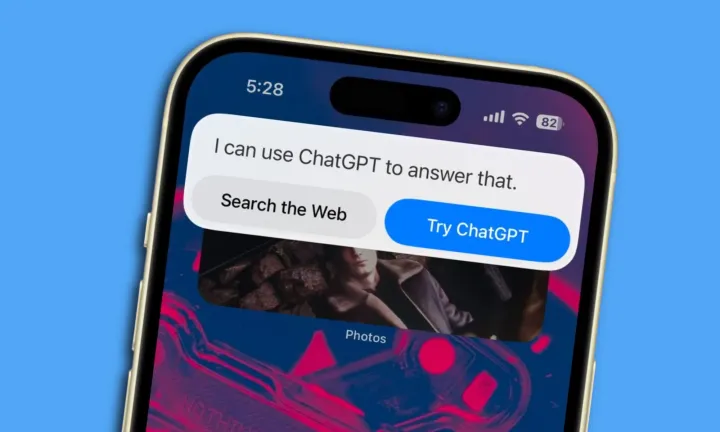

As tools such as Anthropic’s Claude and OpenAI’s ChatGPT become part of everyday life, the stakes of these vulnerabilities grow. Consider this:

- If AI models start malfunctioning because of data poisoning, user trust could plummet and slow wider adoption.

- Businesses that depend on AI for critical functions such as financial forecasting could face severe operational disruptions.

- As AI technologies evolve, so will attackers’ tactics. This underscores the urgent need for better ways to detect poisoned data and for training procedures that can withstand it.

As AI becomes more entwined with our professional and personal lives, it’s crucial to stay informed and vigilant. Anthropic’s study shows that the integrity of training data is a security issue, and findings like these point to where safer AI practices need to develop.

As this landscape keeps changing, consider how you can engage with or influence developments in AI safety. We invite you to share your thoughts, experiences, or questions about AI vulnerabilities.